Transform information into personal knowledge

Your intelligent companion for meetings, lectures, and conversations.

AI Research Symposium Notes

Key Research Areas

- Multi-modal learning approaches

- Context-aware systems

- Cognitive science mechanisms

Frameworks

Dr. Tanaka's presentation on cognitive frameworks with ML approaches.

Reference: Tanaka et al. (2024) [1]

[1] "Cognitive-Inspired Attention in LLMs"

Multi-modal learning involves training models on multiple types of data inputs (text, images, audio) simultaneously.

This helps models develop more robust representations by connecting concepts across different modalities.

The most promising aspect is how attention mechanisms in LLMs can be improved by cognitive science principles.

私たちの研究では、人間の視覚的注意の仕組みをモデルに組み込むことで、パフォーマンスが向上することが示されています。

(Translation: Our research shows that incorporating the mechanisms of human visual attention into the model improves performance.)

Have you considered how this might apply to cross-modal learning?

Your companion for every conversation

Paralogue works for both in-person and online sessions and integrates with all meeting platforms. It is available exclusively to you and does not join as a meeting bot.

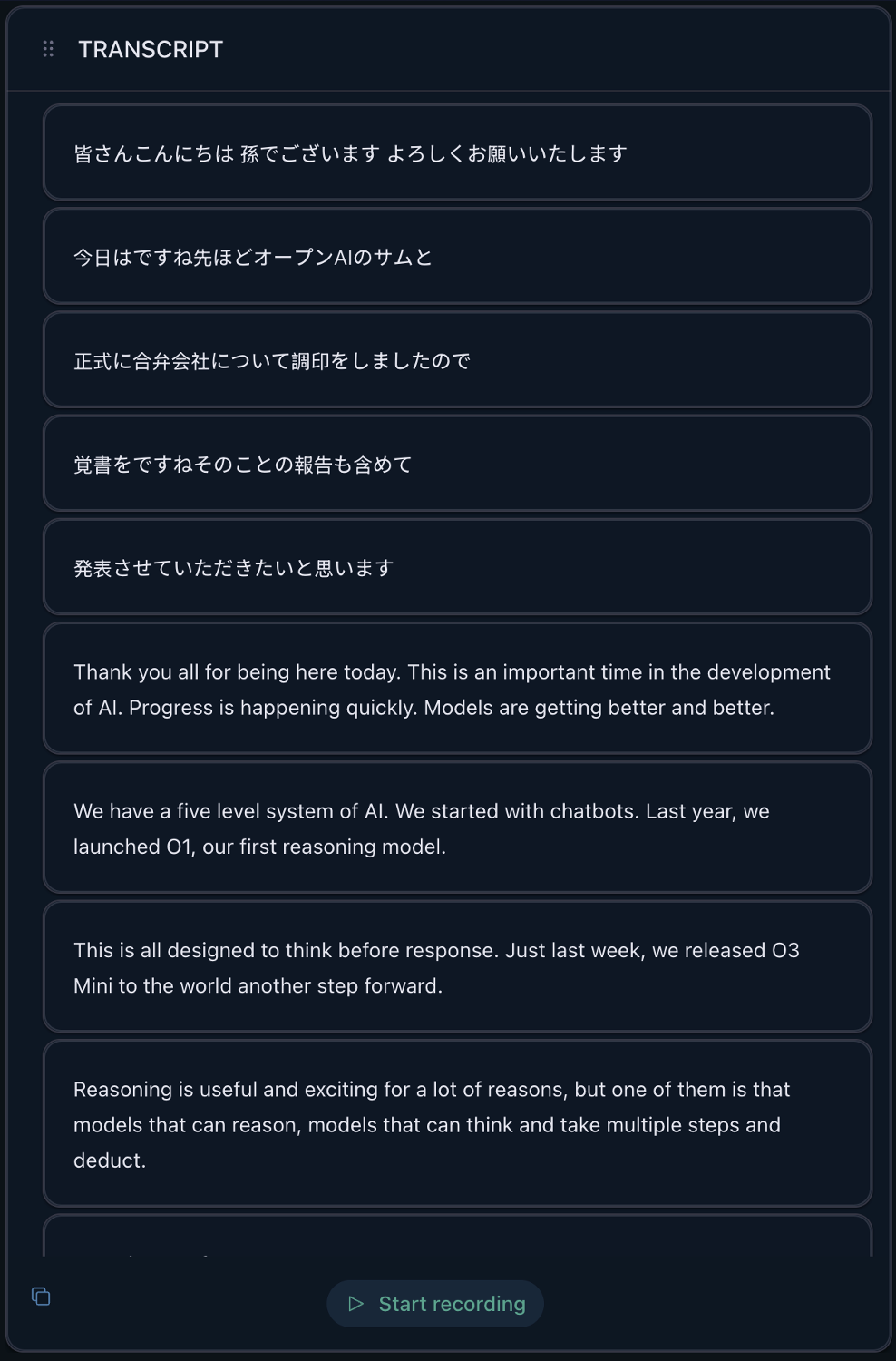

Real-time Transcription

Capture spoken content with industry-leading accuracy.

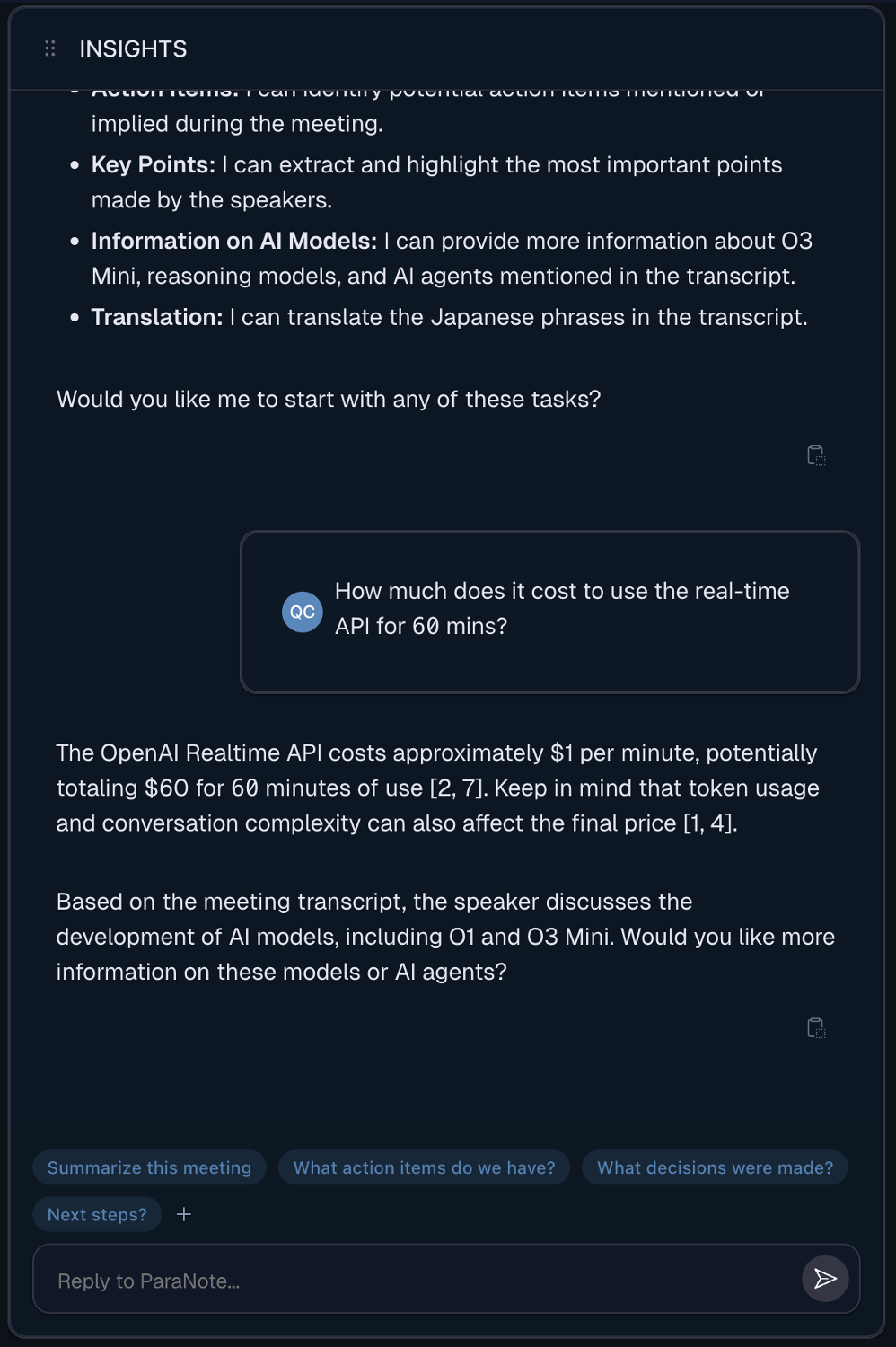

Contextual AI Assistance

Get instant insights and answers during your sessions.

Enhanced Note-Taking

Transform raw transcripts into structured knowledge.

Key Features

- AI-enhanced note organization and structuring

- Automatic action item extraction

- Key point summarization and highlighting

Paralogue for everyone

From professional settings to personal projects.

Academic Research & Education

Transform complex lectures and research discussions into structured knowledge with powerful multilingual support. Capture technical terminology accurately while you focus on the ideas, not on note-taking.

Key Benefits

- Capture sophisticated discussions with field-specific terminology

- Organize research findings with AI-enhanced structure

- Study more effectively with contextual understanding

- Focus on participation, not documentation

Business Professionals

Never lose valuable insights from meetings again. Track decisions, action items, and key discussion points across projects, people, and time periods without additional effort.

Key Benefits

- Automatic action item extraction and assignment

- Searchable history across all conversations

- Seamless integration with existing meeting platforms

- Cross-reference information from previous meetings

Language Learners

Accelerate language acquisition by capturing both native and translated content simultaneously. Get real-time explanations of grammar, vocabulary, and cultural context during conversations.

Key Benefits

- Real-time translation alongside original text

- Contextual explanations of unfamiliar terms

- Build a personal language-learning database

- Study conversations with pronunciation guidance

Creative Professionals

Capture inspiration whenever it strikes. Transform brainstorming sessions, interviews, and creative discussions into structured concepts without breaking your creative flow.

Key Benefits

- Never lose a brilliant idea during rapid discussions

- Organize creative concepts into workable outlines

- Extract references and influences automatically

- Focus on creation rather than documentation

Join the Waitlist

Be among the first to experience Paralogue and transform your conversations into knowledge.

We respect your privacy and will never share your information.